Marketing research, especially academic research, now analyzes a lot of unstructured text data. (Unstructured data is data that does not come in a neat database or spreadsheet form of rows and columns.) Classifying such text is a task that computers excel at. So, how do we go about comparing text classification methods to find which one best fits our purposes?

Service To The Field

Four researchers did a service to the field by assessing ten different types of text classification. They looked at data a marketer might want to analyze and saw what worked well on that particular type of text.

How to decide what is good performance? The researchers compare the performance of the models to human judgment.

- Does a human think the text is positive, negative, or neutral?

- Does the human think the text contains information, emotion, or a combination of both?

The idea is that humans understand text rather well. We want our algorithms to reach an understanding similar to ours.

So why don’t people just read the text themselves, if man be the measure of a machine?

There certainly can be benefits to automated methods in terms of quality, e.g., consistency. Yet, that probably isn’t the main reason. Have you seen how much text exists online? The greatest benefits of setting machines to analyze text data often come in speed, cost savings, and lack of boredom. (Computers don’t get bored, or at least we don’t think they do. We may be storing up resentments that help explain why nearly every science fiction movie has robots rebelling. Occasionally, I too feel angry at the world after reading enough Twitter posts. As did many Trump supporters I hear).

Out-of-sample Accuracy Test

The authors use an out-of-sample accuracy test. They test a variety of text sources, e.g., Twitter, corporate blogs, Yelp, and Facebook.

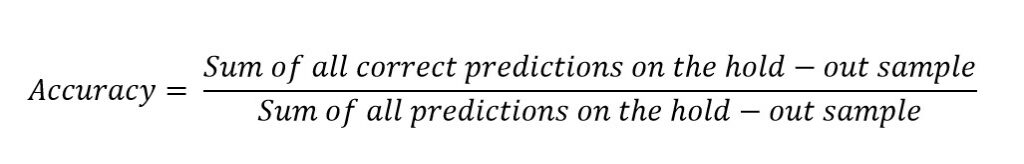

They train the models on 80% of each dataset. This is called the training data/sample. For this 80%, the authors tell the model what the right answer is, e.g., ‘This blog has a positive tone according to our human judges’. Then the models are set loose on the other 20% of each dataset. This is called the hold-out sample/data.
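To make the 80/20 idea concrete, here is a minimal sketch in Python. It is not the authors' code. The function names (`train_holdout_split`, `accuracy`) and the toy data are my own assumptions, and I shuffle before splitting, which the description above does not spell out.

```python
import random


def train_holdout_split(examples, train_share=0.8, seed=0):
    """Shuffle labelled examples, then cut them into training and hold-out sets."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)  # fixed seed so the split is repeatable
    cut = int(len(shuffled) * train_share)
    return shuffled[:cut], shuffled[cut:]


def accuracy(predicted, actual):
    """Share of hold-out items where the model agrees with the human label."""
    matches = sum(p == a for p, a in zip(predicted, actual))
    return matches / len(actual)


# Made-up (text, human label) pairs, purely for illustration.
labelled = [
    (f"post {i}", "positive" if i % 2 == 0 else "negative") for i in range(10)
]
train, holdout = train_holdout_split(labelled)
```

The model only ever sees the labels in `train`. Its guesses on `holdout` are then scored against the human labels with `accuracy`.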

Comparing Text Classification Methods

They compared ten text classification methods. Five of these were lexicon-based. Such methods basically use dictionaries of words related to relevant categories. For example, “great” might be classed as a positive word. Thus, if you get a load of words like “great” the machine will say the piece of text is positive. The five lexicon-based methods they used were LIWC, AFINN, BING, NRC, and VADER. None of these did very well so you don’t really need to remember them.
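A lexicon-based method can be sketched in a few lines. The word lists below are tiny and made up for illustration. They are not taken from LIWC, AFINN, BING, NRC, or VADER, and the real tools are more elaborate than simple word counting.

```python
import re

# Hypothetical mini-dictionaries; real lexicons hold thousands of entries.
POSITIVE_WORDS = {"great", "good", "love", "excellent"}
NEGATIVE_WORDS = {"bad", "awful", "hate", "terrible"}


def lexicon_sentiment(text):
    """Count positive and negative dictionary words and compare the totals."""
    words = re.findall(r"[a-z']+", text.lower())
    positives = sum(w in POSITIVE_WORDS for w in words)
    negatives = sum(w in NEGATIVE_WORDS for w in words)
    if positives > negatives:
        return "positive"
    if negatives > positives:
        return "negative"
    return "neutral"
```

For example, `lexicon_sentiment("Great food, great service!")` counts two positive words and no negative ones, so it returns `"positive"`. Note that nothing is learned from the training data here: the dictionary is fixed in advance.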

Five models were machine learning approaches: ANN (Artificial Neural Network), kNN (k-Nearest Neighbors), NB (Naive Bayes), SVM (Support Vector Machine), and RF (Random Forest). These methods learn patterns. They see which characteristics of the texts in the training sample go with the labels the humans gave, for example, positive. They then look for those characteristics in the hold-out sample. The models ‘guess’ the label of each hold-out text by pattern matching on those characteristics.
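As one illustration of learning from labelled text, here is a bare-bones Naive Bayes classifier. It is a sketch under my own assumptions (whitespace tokenization, add-one smoothing, words unseen in training are skipped, four made-up training texts), not the setup used in the paper.

```python
import math
from collections import Counter, defaultdict


def tokenize(text):
    return text.lower().split()


def train_naive_bayes(examples):
    """Count how often each word appears under each human-assigned label."""
    label_counts = Counter()
    word_counts = defaultdict(Counter)
    vocab = set()
    for text, label in examples:
        label_counts[label] += 1
        for word in tokenize(text):
            word_counts[label][word] += 1
            vocab.add(word)
    return label_counts, word_counts, vocab


def predict(model, text):
    """Return the label with the highest log-probability for the text."""
    label_counts, word_counts, vocab = model
    total_docs = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, n_docs in label_counts.items():
        score = math.log(n_docs / total_docs)  # prior for this label
        total_words = sum(word_counts[label].values())
        for word in tokenize(text):
            if word not in vocab:
                continue  # ignore words never seen in training
            # Add-one smoothing avoids a zero probability for rare words.
            score += math.log(
                (word_counts[label][word] + 1) / (total_words + len(vocab))
            )
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Made-up training sample with human labels.
training = [
    ("great food great service", "positive"),
    ("loved the friendly staff", "positive"),
    ("awful food rude staff", "negative"),
    ("terrible slow service", "negative"),
]
model = train_naive_bayes(training)
```

Calling `predict(model, "great friendly service")` picks the label whose training texts used those words most often. Unlike the lexicon approach, the word-to-label associations come entirely from the human-labelled training sample.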

Performance varies across contexts. Still, they did have winners.

“Nevertheless, across all different contexts, ANN, NB, and RF consistently achieve the highest performances.”

Hartmann, Huppertz, Schamp, and Heitmann (2019), page 27

The authors explain that it is important to have the right methods for the data at hand. Yet, Random Forest and Naive Bayes do consistently well so it would be hard to go wrong with them. (The latter being especially impressive given it is Naive and Bayes was long dead before the first tweet was ever tweeted).

It is useful to know what tools work best for each task. The authors do the field a favor by seeing what works best with the various marketing-relevant data samples.

Read: Jochen Hartmann, Juliana Huppertz, Christina Schamp, and Mark Heitmann (2019) Comparing automated text classification methods, International Journal of Research in Marketing, 36(1) pages 20-38