Social Psychology, and the related field of Consumer Behavior, relies on laboratory experiments. This has great benefits. Lab experiments give the flexibility to investigate causation and test interesting ideas. They have been very successful. When is too much success a bad thing?

Lab Experimentation

Given the benefits of lab experiments, Consumer Behavior scholars doing experimental work have come to dominate marketing. I’d guess about two-thirds of marketing academics study Consumer Behavior. Unfortunately, lab experiments come with downsides. They create data for a specific purpose. Some scholars have succumbed to the (admittedly massive) temptation to fraudulently create favorable data. Some journals have started to ask for data to be shared. This should, at a minimum, allow us to catch the most incompetent of the fraudsters. Still, the problem undermines the entire field.

There exists a broader problem. Even scholars who would never simply make up the data can fall into traps. Researchers must drop unintelligible responses. It is easy to see how someone could, even subconsciously, drop a few unhelpful responses. There are any number of good reasons to drop data. A scholar might, unconsciously, see unhelpful data as more likely to be flawed. After all, they are more likely to check data that doesn’t say what they expect. When the sun rises each morning we don’t question it because that is what we expect.

Furthermore, journals don’t publish inconclusive results. When experiments don’t work everyone ignores them. This means published effects give a false impression of the likelihood of a positive result. We see the five published studies that show an effect. Sadly, we know nothing about the ten studies that failed to do so. We think that the effect is robust. But it may be more questionable.
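To make the point concrete, here is a rough simulation sketch. Everything in it is an illustrative assumption rather than data from any real literature: a small true effect of 0.2 standard deviations, 30 participants per group, a known unit variance (so a simple z-test applies), and a rule that only significant results in the expected direction get published.

```python
import random
from statistics import NormalDist, mean

# All numbers below are hypothetical, chosen only to illustrate the argument.
TRUE_EFFECT = 0.2      # assumed true difference between groups, in SD units
N_PER_GROUP = 30       # assumed participants per condition
N_STUDIES = 3000       # assumed number of studies run across the field
Z_CRIT = NormalDist().inv_cdf(0.975)  # two-sided 5% cut-off


def simulate_study(rng):
    """Run one two-group study; return (observed effect, published?)."""
    treatment = [rng.gauss(TRUE_EFFECT, 1.0) for _ in range(N_PER_GROUP)]
    control = [rng.gauss(0.0, 1.0) for _ in range(N_PER_GROUP)]
    observed = mean(treatment) - mean(control)
    # Unit variance is assumed known, so the standard error is fixed.
    standard_error = (2.0 / N_PER_GROUP) ** 0.5
    # Assumed publication rule: only significant, positive results appear.
    published = observed / standard_error > Z_CRIT
    return observed, published


rng = random.Random(2015)  # fixed seed so the sketch is repeatable
results = [simulate_study(rng) for _ in range(N_STUDIES)]

all_effects = [effect for effect, _ in results]
published_effects = [effect for effect, ok in results if ok]

print(f"Studies run:            {len(all_effects)}")
print(f"Studies published:      {len(published_effects)}")
print(f"Mean effect, all:       {mean(all_effects):.2f}")
print(f"Mean effect, published: {mean(published_effects):.2f}")
```

Under these assumptions only a minority of studies reach print, and the average published effect is well above the true one. A reader who sees only the published studies would think the effect is larger and more reliable than it is.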

When Is Too Much Success A Bad Thing?

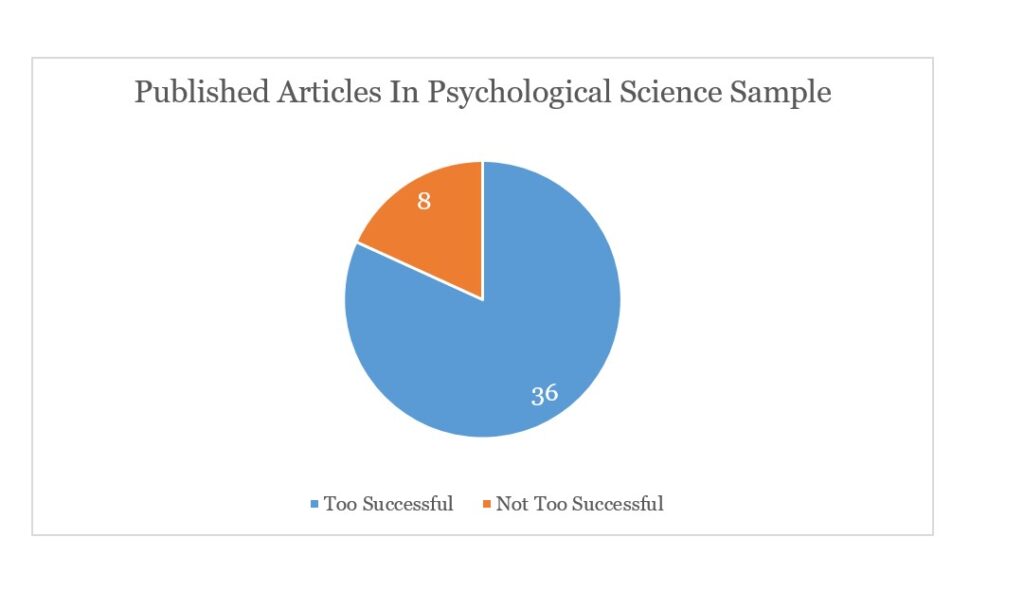

Francis (2014) looked at papers in Psychological Science with four or more studies and found worrying results. The method he used is controversial, so some would argue with it. Still, he found that 36 out of 44 articles (82%) in Psychological Science were excessively successful, i.e., too good to be true.

…82% of articles in Psychological Science appear to be biased…

Francis, 2014, page 1185

He cannot say why this occurred. Some explanations don’t involve fraud or incompetence. Still, it really isn’t great news.

When empirical studies succeed at a rate much higher than is appropriate for the estimated effects and sample sizes, readers should suspect that unsuccessful findings have been suppressed, the experiments or analyses were improper, or the theory does not properly account for the data.

Francis, 2014, page 1180
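The arithmetic behind "too good to be true" can be sketched roughly as follows. This is a simplified illustration, not Francis's actual procedure: it uses a normal approximation for power, and the effect size (0.5), sample size (30 per group), number of studies (4), and the 0.1 cut-off are all assumptions picked for the example. The idea is that if each study has only a modest chance of working, the chance that every study in a paper works is the product of those chances, which shrinks quickly.

```python
from math import prod
from statistics import NormalDist


def approx_power(effect_size, n_per_group, alpha=0.05):
    """Approximate power of a two-sided, two-group test (normal approximation)."""
    norm = NormalDist()
    z_crit = norm.inv_cdf(1 - alpha / 2)
    # Expected z-statistic if the assumed effect size is the true one.
    expected_z = effect_size * (n_per_group / 2) ** 0.5
    return (1 - norm.cdf(z_crit - expected_z)) + norm.cdf(-z_crit - expected_z)


# Hypothetical paper: four studies, each with effect size 0.5 and 30 per group.
studies = [(0.5, 30)] * 4

powers = [approx_power(d, n) for d, n in studies]

# Probability that all four independent studies succeed.
joint_success = prod(powers)

# Assumed cut-off for flagging a set of studies as suspiciously successful.
THRESHOLD = 0.1
looks_too_successful = joint_success < THRESHOLD

print(f"Power of each study:     {powers[0]:.2f}")
print(f"Chance all four succeed: {joint_success:.3f}")
print(f"Flagged as excess success: {looks_too_successful}")
```

With these made-up numbers each study works about half the time, so four successes in a row should be fairly rare. A paper reporting exactly that pattern is not proof of wrongdoing, but it is the kind of result that should make a reader ask what was left out.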

More Independent Theory Needed

A solution that appeals to me is for marketers to ensure that we draw more on theory and ideas completely independent of other laboratory experiments. Academic literature reviews shouldn’t just be lists of other very similar experimental papers. They should tell us how the lab experiments fit with non-experimental papers. Why not give real-world examples? These really help to get away from fears it is just made up in the lab.

I wrote this in 2015. There has been a huge amount more on such problems in recent years. For more on the replication crisis in psychology see here.

For more on challenges in academia see here.

Read: Gregory Francis (2014), The frequency of excess success for articles in Psychological Science, Psychonomic Bulletin & Review, 21, pages 1180-1187