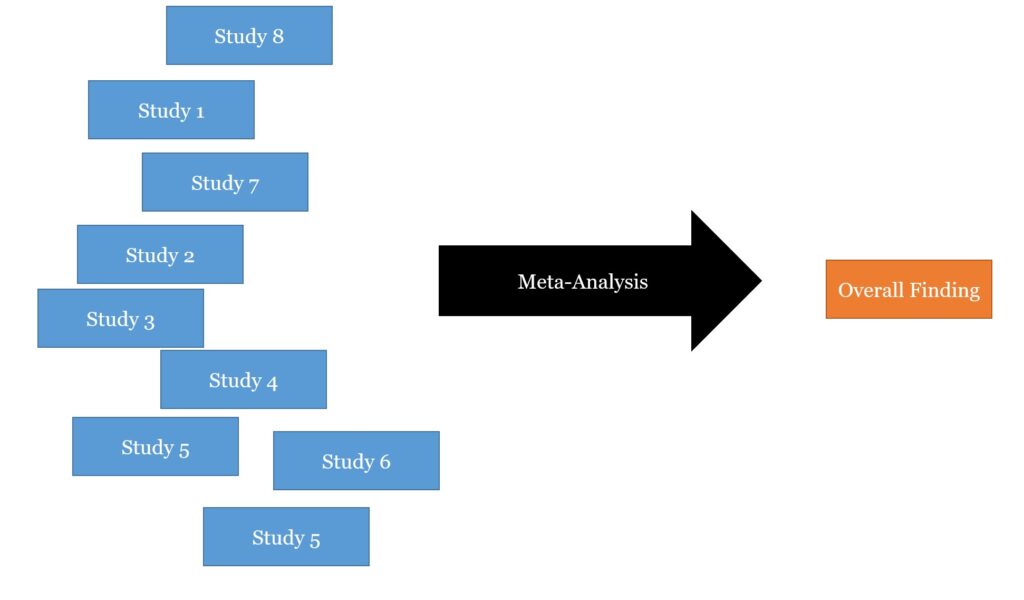

How marketing affects firm value is an important question for for-profit businesses. In an ambitious paper, Conchar, Crask and Zinkhan (2005) examined this question using a meta-analysis, which combines many results into one.

Meta-Analysis Seems Like Hard Work

The hard work involved in this paper is impressive. The authors looked at 88 studies of advertising and promotion and noted the relationship each found with what the authors describe as measures of market value. They then conducted a meta-analysis, a way of combining the results of many studies into a single analysis. The technique seems especially popular in psychology. The basic idea is that by looking at several similar studies and comparing their outcomes, we can see whether any single study was an anomaly.
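For readers new to the technique, here is a sketch of the core arithmetic in one common textbook form of meta-analysis: a fixed-effect model that weights each study by the inverse of its variance, plus a leave-one-out check that compares each study with the pooled result of the others. This is not necessarily the model Conchar and colleagues used, and the four effect sizes and standard errors are invented for illustration, not taken from the 88 studies.

```python
import math

def pool(effects, ses):
    """Fixed-effect (inverse-variance) pooled estimate and its standard error."""
    weights = [1.0 / se ** 2 for se in ses]  # more precise studies get more weight
    total = sum(weights)
    estimate = sum(w * e for w, e in zip(weights, effects)) / total
    return estimate, math.sqrt(1.0 / total)

def leave_one_out_z(effects, ses):
    """For each study, z-score of its gap from the pooled estimate of all the others."""
    zs = []
    for i in range(len(effects)):
        rest_e = effects[:i] + effects[i + 1:]
        rest_se = ses[:i] + ses[i + 1:]
        est, est_se = pool(rest_e, rest_se)
        # The study and the pooled rest are independent, so their variances add.
        gap_se = math.sqrt(ses[i] ** 2 + est_se ** 2)
        zs.append((effects[i] - est) / gap_se)
    return zs

# Hypothetical effect sizes and standard errors for four studies (not real data).
effects = [0.20, 0.25, 0.15, 0.90]
ses = [0.10, 0.10, 0.10, 0.10]

estimate, se = pool(effects, ses)
print(f"pooled estimate {estimate:.3f} (SE {se:.3f})")
print([round(z, 2) for z in leave_one_out_z(effects, ses)])
```

With these made-up numbers the fourth study has by far the largest leave-one-out z-score, which is the "anomaly" idea in miniature. When studies are expected to differ genuinely, analysts often use a random-effects model instead of this fixed-effect one.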

Unfortunately, the structure of academia militates against the success of many meta-analyses. I don't want to be too negative; meta-analyses often add new insight. Still, I worry that Conchar and colleagues suffered from the typical problems of meta-analyses while adding some new ones. Because they can only analyze studies that have already been done, they are limited by the quantity and quality of past work. To be fair, that is true of all meta-analyses.

The File Drawer Problem

Another common challenge is the file drawer problem. Generally, only successful tests get published. Journals, for understandable reasons, don't want to print that Professor XYZ did a load of work that completely failed to produce an interesting result. If you use only published data, therefore, it looks like everything worked. Best practice, and to be fair the authors did this, is to ask for unpublished studies as well. This is a sensible approach. Yet even the hardest-working researcher who reaches out to everyone possible would be unwise to claim that negative and positive results have an equal chance of being represented. The dramatic or novel is always more likely to be reported.
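A small simulation shows why the file drawer matters. It assumes many studies estimate the same true effect with random noise, and that only results which come out positive and statistically significant (z > 1.96) get published. The true effect, noise level and publication rule are all illustrative assumptions, not features of the advertising literature.

```python
import random
import statistics

def simulate_file_drawer(true_effect=0.1, se=0.1, n_studies=2000, seed=1):
    """Compare the average estimate across all studies with the 'publishable' ones only."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    # Each study's estimate = true effect + sampling noise with standard deviation se.
    estimates = [rng.gauss(true_effect, se) for _ in range(n_studies)]
    # Assumed publication rule: only positive, significant results leave the drawer.
    published = [e for e in estimates if e / se > 1.96]
    return statistics.mean(estimates), statistics.mean(published), len(published)

all_mean, pub_mean, n_pub = simulate_file_drawer()
print(f"all studies: {all_mean:.3f}; published only ({n_pub} studies): {pub_mean:.3f}")
```

Because every simulated study has the same standard error, the simple mean here equals the inverse-variance pooled estimate. The published-only average overstates the true effect substantially, even though no individual study did anything wrong.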

Combining Different Studies

Another feature of academia is probably the most challenging for these particular authors. It is very hard to win plaudits for “replications”, that is, doing the same thing as someone else. We all want to do exciting new stuff, and to be published each new study must be a bit different from the last. In the Conchar piece, this means the market value measures are nearly all different. Given that each study predicts a different outcome, I'm not sure what it means to put them together into a single analysis.

Meta-Analysis: Combining Many Results into One

To see the problem, think of market value as analogous to the size of a person. We want to see what is associated with a person's size. Unfortunately, the size construct appears in many different forms: height, weight, size compared with the average person in your area, size compared with the average person of the same gender worldwide, and so on.

The inputs differ too: we look at men, women, children, babies, older people, and so on. The resulting relationships will be extremely messy. Babies might get taller with more food, but adults won't. Where you live will likely be associated with size, but not if you adjust for local averages. To make sense of it all, we need some theory of what we expect to happen. Such a theory is largely missing from this work, and the authors largely admit the problem:

the choice of the most appropriate criterion measure should ultimately be grounded in theory rather than based upon measurement considerations.

Conchar, Crask and Zinkhan, 2005, page 456

Translated from academic-speak, this means: don't just throw a bunch of stuff you don't understand into a statistical model and see what happens.
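Standard meta-analysis does offer a check on how much studies disagree: Cochran's Q and the I² statistic. The sketch below applies them to the size analogy, using four invented "effect of extra food on size" estimates, two from hypothetical baby studies and two from hypothetical adult studies. The numbers are illustrative assumptions, not real data, and the weights follow the same simple fixed-effect scheme a basic meta-analysis would use.

```python
def heterogeneity(effects, ses):
    """Cochran's Q and I^2 for a set of study estimates (fixed-effect weights)."""
    weights = [1.0 / se ** 2 for se in ses]
    pooled = sum(w * e for w, e in zip(weights, effects)) / sum(weights)
    # Q: weighted squared distance of each study from the pooled estimate.
    q = sum(w * (e - pooled) ** 2 for w, e in zip(weights, effects))
    df = len(effects) - 1
    # I^2: share of the variation beyond what sampling noise alone would produce.
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0
    return pooled, q, i2

# Hypothetical "effect of extra food on size": two baby studies, then two adult studies.
effects = [0.50, 0.60, 0.00, 0.05]
ses = [0.10, 0.10, 0.10, 0.10]
pooled, q, i2 = heterogeneity(effects, ses)
print(f"pooled {pooled:.3f}, Q {q:.1f}, I^2 {i2:.0%}")
```

Here the pooled estimate describes neither babies nor adults, and the high I² flags that the studies disagree far more than noise would explain. What the statistic cannot say is why they disagree; that takes the kind of theory the quoted passage calls for.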

Lack of Causation

Their model can't speak to causes. So if they see high marketing spend associated with high market value, they can't tell whether marketing increases market value. Perhaps instead a high market value allows a firm to splurge on marketing. This seems a pretty crucial distinction. Again, I don't want to be too negative; I admire the work that went into this paper.
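A toy simulation illustrates the point. By assumption, firm value below is generated with no input from marketing at all, and each firm then spends a made-up 5% of its value on marketing, plus noise. Marketing has zero effect on value in this world, yet the two come out strongly correlated.

```python
import random
import statistics

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    mx, my = statistics.mean(xs), statistics.mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    return cov / (sx * sy)

rng = random.Random(7)  # fixed seed so the run is reproducible
# Assumed world: market value comes from factors that have nothing to do with marketing...
values = [rng.gauss(100, 20) for _ in range(1000)]
# ...and each firm spends a hypothetical 5% of its value on marketing, plus noise.
spend = [0.05 * v + rng.gauss(0, 0.5) for v in values]

r = pearson(spend, values)
print(f"correlation between spend and value: {r:.2f}")
```

Pearson correlation is symmetric, so the same number would come out if spending drove value instead. Telling the two stories apart needs information beyond the correlation itself, such as timing or an experiment.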


Read: Margy P. Conchar, Melvin R. Crask, and George M. Zinkhan (2005), “Market valuation models of the effect of advertising and promotional spending: a review and meta-analysis,” Journal of the Academy of Marketing Science 33 (4), pages 445-460.