Philip Tetlock’s book on expert political judgment is a classic. Even so, he clearly thinks readers took too strong a message from it. That book suggested that experts just aren’t very good at forecasting. He keeps that theme in his new work, but he is now more interested in how forecasting can be improved.

Improving Forecasting

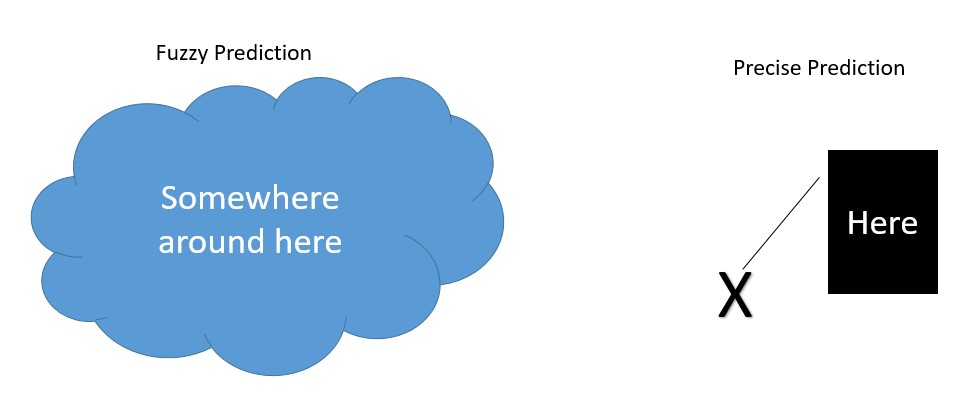

Tetlock and his co-author, Dan Gardner, argue that expert forecasts are often vague and therefore unfalsifiable.

“Fuzzy thinking can never be proved wrong”

Tetlock and Gardner, 2015, page 252

We clearly need less fuzzy thinking if we are to get better at forecasting. The good news is that Tetlock thinks we can improve: we might never be perfect, but we can inch forward. He also explains why forecasts often fail to improve:

The consumers of forecasting — governments, business, and the public — don’t demand evidence of accuracy.

Tetlock and Gardner, 2015, page 14

He thinks too much forecasting is about sounding good rather than making testable predictions. If you never test your forecasts, you never find your mistakes, and so you can never learn from them.

What Can We Do To Improve Forecasting?

He suggests that one way to improve forecasting is to break a problem down into parts that are easier to understand. I think his ideas apply to a lot of work in metrics. Expressing something clearly gives you the chance to be visibly wrong, and so to improve. This is vastly superior to vague nonsense. I agree with Tetlock: “… don’t suppose that because a scoring system needs tweaking it isn’t a big improvement” (Tetlock and Gardner, 2015, page 261).
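To make the point about scoring concrete, here is a minimal sketch of one common scoring rule, the Brier score, which the book uses to grade forecasters. It is only an illustration under stated assumptions: the function name `brier_score` and all the forecast data are hypothetical, and this uses the simple single-probability version (0 is perfect, 1 is worst), so the book’s exact formulation may differ.

```python
def brier_score(forecasts, outcomes):
    """Mean squared error between probability forecasts and 0/1 outcomes.

    Hypothetical helper: single-probability Brier score, 0 = perfect, 1 = worst.
    """
    if len(forecasts) != len(outcomes):
        raise ValueError("forecasts and outcomes must be the same length")
    if not forecasts:
        raise ValueError("need at least one forecast")
    for p in forecasts:
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must lie in [0, 1]")
    # Square the gap between each stated probability and what actually happened.
    return sum((p - o) ** 2 for p, o in zip(forecasts, outcomes)) / len(forecasts)


# Hypothetical data: 1 means the event happened, 0 means it did not.
outcomes = [1, 0, 1, 1, 0]
confident = [0.9, 0.2, 0.8, 0.7, 0.1]  # explicit, committed probabilities
hedger = [0.5] * 5                      # always says "fifty-fifty"

print(brier_score(confident, outcomes))
print(brier_score(hedger, outcomes))
```

Here the confident forecaster scores lower (better) than the hedger, but the comparison is only possible because both stated explicit probabilities. A forecast like “this could well happen” cannot be fed into a scoring rule at all, which is exactly the fuzziness Tetlock warns about.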

Flush Ignorance Into The Open

His advice is clear and widely applicable.

“Flush ignorance into the open. Expose and examine your assumptions. Dare to be wrong by making your best guesses. Better to discover errors quickly than to hide them behind vague verbiage”

Tetlock and Gardner, 2015, page 278

There is a lot that can be learned from listening to Tetlock. I’ll predict that the world will be a better place if we pay attention to the advice he shares. Sadly, this isn’t really a testable prediction, given that “better place” is a little vague, but hopefully you get my point.

For more on Philip E. Tetlock’s work, see here. For Nate Silver’s book on prediction, see here.

Read: Philip E. Tetlock and Dan Gardner (2015) Superforecasting: The Art and Science of Prediction, McClelland and Stewart.